The evolution of digital spaces has given rise to new types of hate speech and online harassment that challenge traditional regulatory frameworks. For instance, meme culture has been co-opted to spread hate speech through seemingly innocuous images that carry deeply offensive connotations, making it difficult for algorithms and moderators to detect and address. Echo chambers and filter bubbles within social media platforms facilitate the proliferation of hateful ideologies by creating insular communities that reinforce extremist views without exposure to counterarguments. These dynamics underscore the adaptability of hate speech and online harassment tactics in the digital age. They necessitate ongoing research and innovation in detection methodologies, alongside a critical examination of the ethical implications of regulating speech in an environment that values freedom of expression. Understanding the multifaceted nature of these phenomena is crucial for developing effective strategies to combat them while safeguarding democratic principles and human rights in digital spaces.

Legal Frameworks Governing Online Speech

Conversely, European countries tend to regulate online speech more stringently, as exemplified by Germany's Network Enforcement Act (NetzDG) and the EU's Code of Conduct on countering illegal hate speech online. NetzDG requires large social networks to remove manifestly unlawful content within 24 hours of a complaint and provides for fines of up to €50 million for systematic non-compliance. The Code of Conduct, by contrast, is a voluntary commitment under which participating platforms agree to review most valid notifications of illegal hate speech within 24 hours. Such regulatory approaches aim to strike a balance between protecting individuals from harm and preserving free expression, albeit with a greater emphasis on the former. This divergence in legal frameworks highlights a fundamental tension in governing digital spaces: how to address the harms caused by hate speech and online harassment without eroding the foundational values of open discourse and freedom of expression. As digital platforms continue to evolve and operate across international boundaries, policymakers face the challenge of developing cohesive strategies that respect these varying legal traditions while fostering a safer online environment for all users.

Psychological Impacts of Hate Speech on Victims

The long-term effects of such experiences cannot be overstated: they can undermine victims' sense of safety and belonging in digital spaces, fostering a climate of fear that deters meaningful participation and engagement. For marginalized groups, who are disproportionately targeted by hate speech and online harassment, these impacts are particularly pernicious, reinforcing existing societal prejudices and exacerbating feelings of alienation. Research and interventions must therefore account for the cumulative burden of these experiences on individuals' psychological well-being and advocate for supportive measures that address both the immediate and the lingering effects of hate speech and online harassment. Such measures include robust support systems within digital platforms as well as broader societal initiatives that promote empathy and understanding to counteract the divisiveness fueled by hate speech.

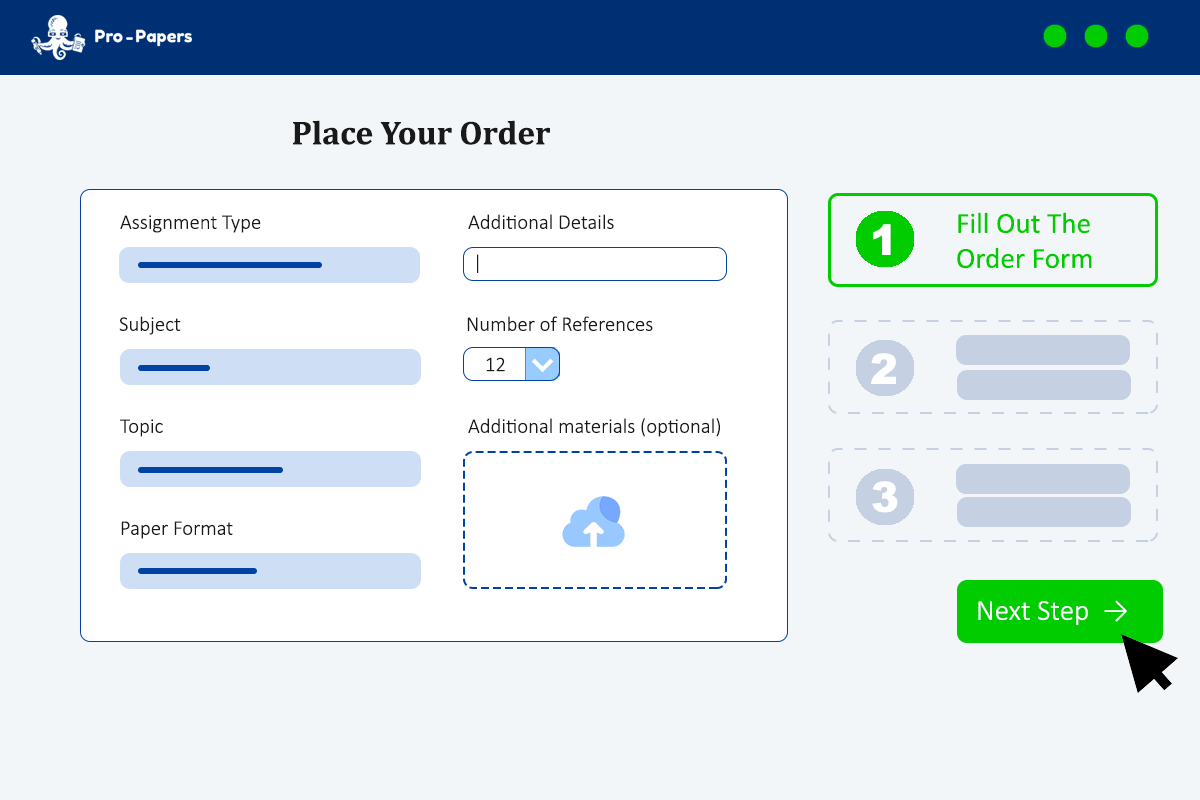

Role of Social Media Platforms in Moderating Content

To navigate these challenges, there is a growing call for social media platforms to engage more actively with stakeholders, including civil society organizations, researchers, and government bodies, to refine their content moderation practices. This collaborative approach aims to create a more nuanced understanding of hate speech dynamics and improve the responsiveness of moderation systems to emerging trends. By fostering open dialogue about their content policies and moderation outcomes, social media companies can build trust with users and demonstrate a commitment to upholding both safety and free speech online. Balancing these competing priorities requires an ongoing reevaluation of moderation practices in light of technological advancements and shifts in societal attitudes toward hate speech and harassment.

Strategies for Combating Hate Speech and Online Harassment

Beyond technological solutions, creating a culture of accountability within online communities is critical. This involves not only implementing clear policies and guidelines regarding acceptable behavior but also fostering an environment where users feel empowered to report violations and support one another. Educational initiatives that raise awareness about the impact of hate speech and online harassment can contribute to a more empathetic online culture. Partnerships between digital platforms, civil society organizations, and policymakers can facilitate the sharing of best practices and promote a coordinated response to these challenges. Combating hate speech and online harassment requires collective action that leverages technology, encourages responsible online behavior, and prioritizes the safety and dignity of all individuals in digital spaces.

Case Studies: Analysis of High-Profile Incidents

Another significant example is the widespread use of social media platforms to disseminate hate speech during the Rohingya crisis in Myanmar. Facebook, in particular, was accused of facilitating the spread of anti-Rohingya propaganda, which contributed to escalating violence and human rights abuses against this minority community. Investigations found that the hate speech was not only rampant but apparently systematically organized, exploiting Facebook's algorithms to maximize its reach and impact. This case demonstrated that digital platforms can be weaponized to incite real-world violence, challenging the assumption that online speech is less consequential than offline speech. It also prompted a reevaluation of content moderation practices and of the ethical responsibilities of tech companies to prevent their platforms from being used to perpetuate hatred and violence. These case studies illustrate the urgent need for comprehensive strategies that address both the digital manifestations of hate speech and their tangible impacts on society.